Recently, DeepSeek open-sourced its file system, Fire-Flyer File System (3FS). In AI applications, enterprises must handle large amounts of unstructured data such as text, images, and videos, and cope with explosive growth in data volume. As a result, distributed file systems have become a key storage technology for AI training.

In this article, we’ll compare 3FS with JuiceFS, analyzing their architectures, features, and use cases, while highlighting innovative aspects of 3FS.

Architecture comparison

3FS

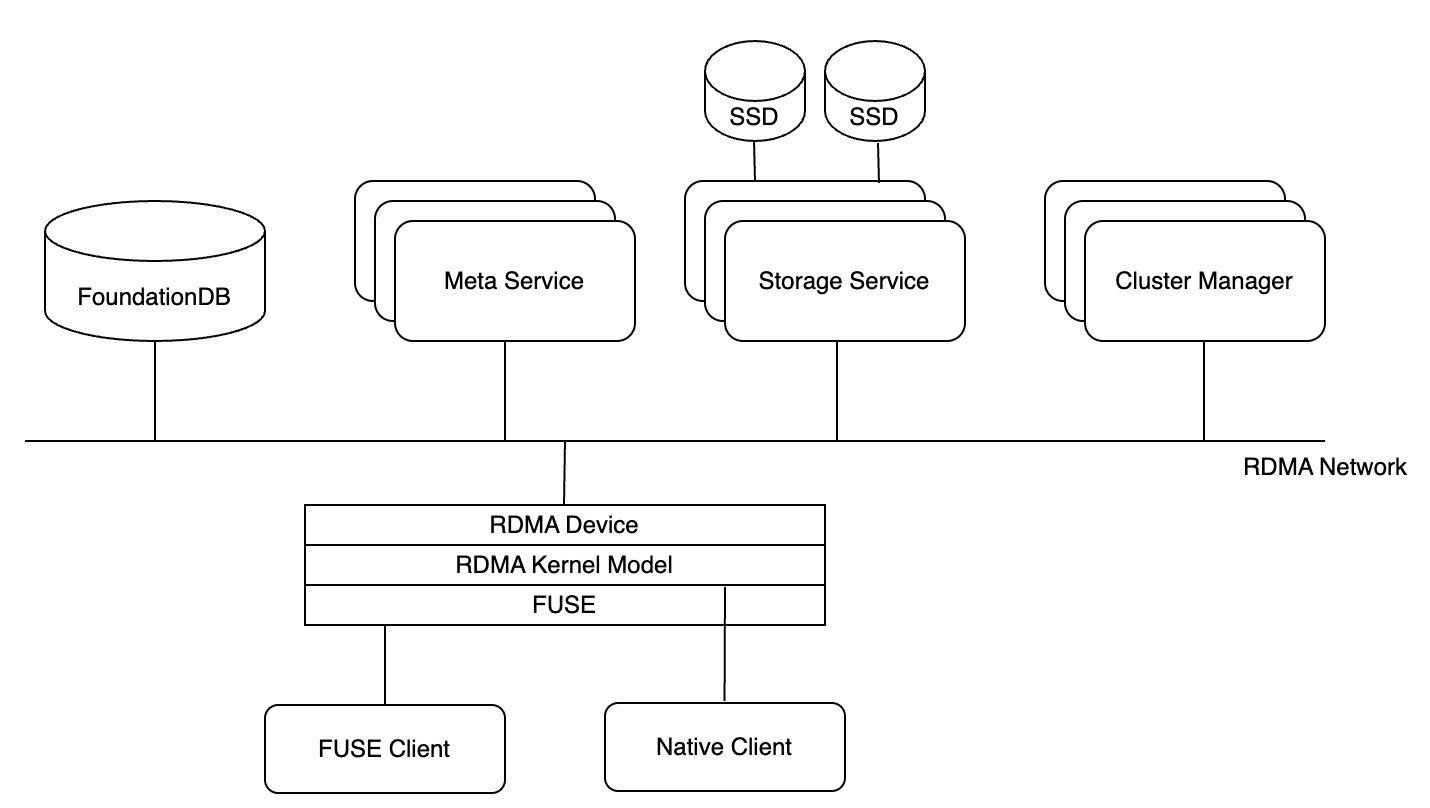

3FS is a high-performance distributed file system designed for AI training and inference workloads. It uses NVMe SSDs and RDMA networks to provide a shared storage layer. Its key components include:

- Cluster manager

- Metadata service

- Storage service

- Clients (FUSE client, native client)

All components communicate via RDMA. Metadata and storage services send heartbeat signals to the cluster manager, which handles node membership changes and distributes cluster configurations to other components. To ensure high reliability and avoid single points of failure, multiple cluster manager services are deployed, with one elected as the primary node. If the primary fails, another manager is promoted. Cluster configurations are stored in reliable distributed services like ZooKeeper or etcd.

For file metadata operations (such as opening or creating files/directories), requests are routed to the metadata service. These services are stateless and rely on FoundationDB—a transactional key-value database—to store metadata. Therefore, clients can flexibly connect to any metadata service. This design allows metadata services to operate independently without state information, thereby enhancing the scalability and reliability of the system.

The 3FS client provides two access methods: the FUSE client and the native client. The FUSE client supports common POSIX interfaces and is easy to use. The native client provides higher performance, but users must call the client API directly, which requires modifying the application. We’ll analyze this in more detail below.

JuiceFS

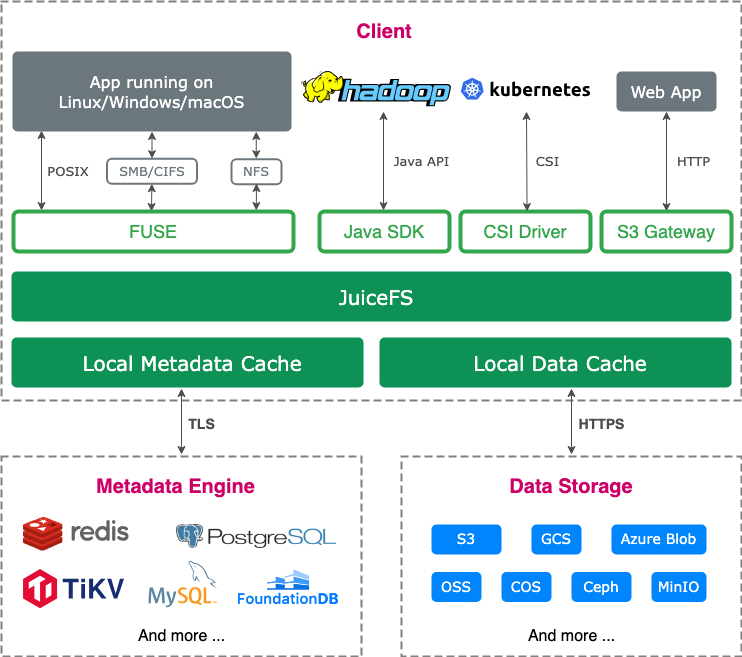

JuiceFS is a cloud-native distributed file system that stores data in object storage. The Community Edition, open-sourced on GitHub in 2021, integrates with multiple metadata services and supports diverse use cases. The Enterprise Edition, tailored for high-performance scenarios, is widely adopted in large-scale AI tasks, including generative AI, autonomous driving, quantitative finance, and biotechnology.

The JuiceFS architecture comprises three core components:

- The metadata engine: Stores file metadata, including standard file system metadata and file data indexes.

- Data storage: Typically an object storage service, either a public cloud service or an on-premises deployment.

- The JuiceFS client: Provides different access methods such as POSIX (FUSE), Hadoop SDK, CSI Driver, and S3 Gateway.

Architectural differences

Both 3FS and JuiceFS adopt a metadata-data separation design, with functionally similar modules. However, unlike 3FS and JuiceFS Enterprise Edition, JuiceFS Community Edition can store metadata in multiple open-source databases. All metadata operations are encapsulated in the client, so users do not need to operate a separate stateless metadata service.

Storage module

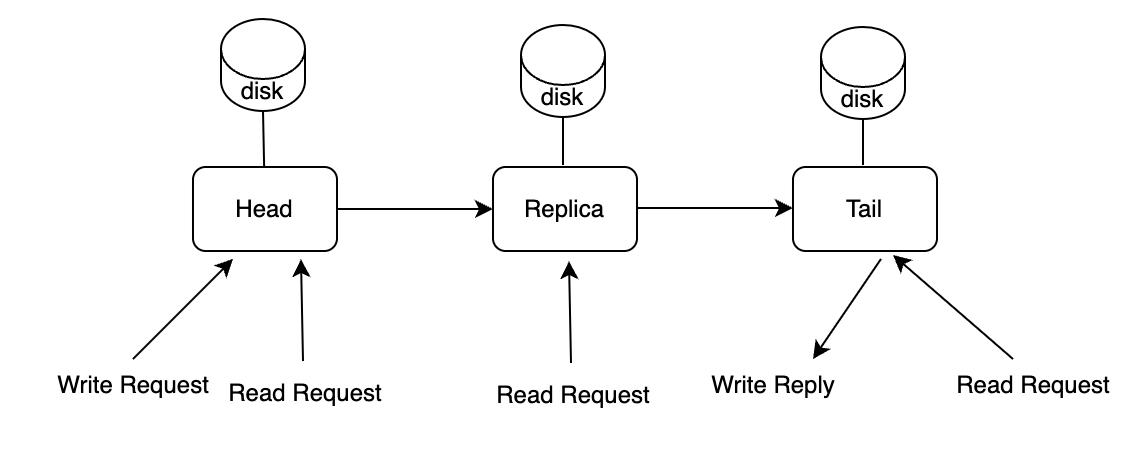

3FS employs many local SSDs for data storage. To ensure data consistency, it utilizes the Chain Replication with Apportioned Queries (CRAQ) algorithm. Replicas are organized into a chain, where write requests start from the head and propagate sequentially to the tail. A write operation is confirmed only after reaching the tail. For read requests, any replica in the chain can be queried. If a "dirty" (stale) node is encountered, it will communicate with the tail node to verify the latest state.
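The write and read paths described above can be illustrated with a minimal in-memory sketch of chain replication with apportioned queries. This is not 3FS code: the `Replica` and `Chain` classes, their fields, and the `hops` parameter are hypothetical names introduced only to show how a dirty replica defers to the tail.

```python
class Replica:
    """One storage target in a chain (hypothetical, in-memory only)."""

    def __init__(self, name):
        self.name = name
        self.committed = {}  # key -> last committed value
        self.pending = {}    # key -> value received but not yet acknowledged


class Chain:
    def __init__(self, names):
        self.replicas = [Replica(n) for n in names]

    @property
    def tail(self):
        return self.replicas[-1]

    def forward(self, key, value, hops=None):
        """Pass a write down the chain from the head for `hops` replicas (default: all)."""
        hops = len(self.replicas) if hops is None else hops
        for replica in self.replicas[:hops]:
            if replica is self.tail:
                replica.committed[key] = value  # the write is confirmed at the tail
            else:
                replica.pending[key] = value    # this replica is now dirty for `key`

    def acknowledge(self, key):
        """Send the tail's acknowledgement back towards the head."""
        for replica in reversed(self.replicas[:-1]):
            replica.committed[key] = replica.pending.pop(key)

    def write(self, key, value):
        self.forward(key, value)
        self.acknowledge(key)

    def read(self, key, replica_index):
        """Read from any replica; a dirty replica asks the tail for the committed state."""
        replica = self.replicas[replica_index]
        if key in replica.pending:
            return self.tail.committed.get(key)
        return replica.committed.get(key)
```

In this sketch, a write that has only reached the first two replicas (`forward(key, value, hops=2)`) leaves them dirty, so a read served by either of them still returns the value last committed at the tail.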

Because writes propagate sequentially through the chain, write latency is higher. If a replica in the chain becomes unavailable, 3FS moves it to the end of the chain and delays recovery until the replica is available again. During recovery, the entire chunk is copied to the replica instead of applying incremental updates. Writing to all replicas in parallel and recovering data incrementally would make the write logic much more complicated; Ceph, for example, uses PG logs to ensure data consistency. Although the 3FS design increases write latency, this trade-off is acceptable for read-intensive AI workloads, because read performance remains the priority.

In contrast, JuiceFS uses object storage as its data storage solution, inheriting key advantages such as data reliability and consistency. The storage module provides a set of standard object operation interfaces (GET/PUT/HEAD/LIST), enabling seamless integration with various storage backends. Users can flexibly connect to different cloud providers' object storage services or on-premises solutions like MinIO and Ceph RADOS. JuiceFS Community Edition provides local cache to meet the bandwidth requirements in AI scenarios, and the Enterprise Edition uses distributed cache to meet the needs of larger aggregate read bandwidth.

Metadata module

In 3FS, file attributes are stored as key-value (KV) pairs within a stateless, high-availability metadata service, backed by FoundationDB—a distributed KV database originally open-sourced by Apple. FoundationDB ensures global ordering of keys and evenly distributes data across nodes via consistent hashing.

To optimize directory listing efficiency, 3FS constructs dentry keys by combining a "DENT" prefix with the parent directory's inode number and file name. For inode keys, it concatenates an "INOD" prefix with the inode ID, where the ID is encoded in little-endian byte order to ensure even distribution of inodes across multiple FoundationDB nodes. This design shares similarities with JuiceFS' approach of using Transactional Key-Value (TKV) databases for metadata storage.
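The key layout can be sketched as follows. The prefixes and the little-endian inode ID come from the description above; the 8-byte width, the encoding of the parent inode number inside the dentry key, and the function names are assumptions made for illustration and may differ from the real 3FS format.

```python
import struct


def dentry_key(parent_inode: int, name: str) -> bytes:
    # Assumption: the parent inode number is a fixed-width 8-byte field, so all
    # entries of one directory share a common prefix and can be range-scanned.
    return b"DENT" + struct.pack("<Q", parent_inode) + name.encode("utf-8")


def inode_key(inode_id: int) -> bytes:
    # Little-endian puts the fastest-changing byte first, so consecutive inode
    # IDs land far apart in the ordered key space.
    return b"INOD" + struct.pack("<Q", inode_id)
```

With this layout, listing a directory becomes a prefix scan over `b"DENT" + <parent inode>`, while inodes with consecutive IDs differ in the first byte after the prefix rather than the last.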

JuiceFS Community Edition provides a metadata module that, similar to its storage module, offers a set of interfaces for metadata operations. It supports integration with various metadata services including:

- Key-value databases (Redis, TiKV)

- Relational databases (MySQL, PostgreSQL)

- FoundationDB

The Enterprise Edition employs a proprietary high-performance metadata service that dynamically balances data and hot operations based on workload patterns. This prevents metadata hotspots on specific nodes during large-scale training scenarios (such as those caused by frequent operations on metadata of adjacent directory files).

Client

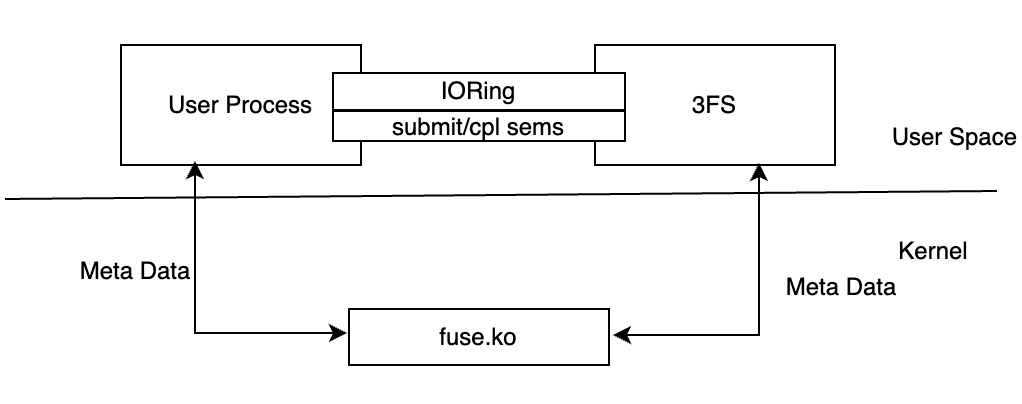

3FS provides both a FUSE client and a native client API (similar to Linux AIO) to bypass FUSE for direct data operations. These APIs eliminate data copying introduced by the FUSE layer, thereby reducing I/O latency and memory bandwidth overhead. Below is a detailed explanation of how these APIs enable zero-copy communication between user processes and the FUSE process.

Implementation overview

3FS uses hf3fs_iov to store shared memory attributes (such as size and address) and uses IORing for inter-process communication.

When a user invokes the API to create an hf3fs_iov, shared memory is allocated in /dev/shm, and a symbolic link to this memory is generated in the virtual directory /mount_point/3fs-virt/iovs/.

When the 3FS FUSE process receives a request to create a symbolic link and detects that its path is within this virtual directory, it parses the shared memory parameters from the symbolic link’s name and registers the memory address with all RDMA devices (unless the memory belongs to an IORing). The result returned by ibv_reg_mr is stored in the RDMABuf::Inner data structure for subsequent RDMA requests.

Meanwhile, IORing memory also uses hf3fs_iov for storage, but the corresponding symbolic link’s file name includes additional IORing-related information. If the FUSE process identifies that the memory is intended for IORing, it also creates a corresponding IORing within its own process. This setup allows both the user process and the FUSE process to access the same IORing.

Inter-process collaboration

To facilitate communication between processes, 3FS creates three virtual files in /mount_point/3fs-virt/iovs/ to share submission semaphores of different priorities. After placing a request into the IORing, the user process uses these semaphores to notify the FUSE process of new requests. The end of the IORing holds a completion semaphore; the FUSE process calls sem_post on it to notify the user process that a request has completed. This mechanism ensures efficient data communication and synchronization between the two processes.
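The notification pattern can be sketched with two semaphores and a pair of queues. This is only an in-process analogy using threads: real 3FS shares the ring through shared memory between two processes and uses three submission semaphores of different priorities, whereas the `Ring` class, `fuse_worker`, and `submit_and_wait` below are hypothetical names and model a single priority.

```python
import threading
from collections import deque


class Ring:
    """Toy stand-in for an IORing shared by a user side and a FUSE side."""

    def __init__(self):
        self.submissions = deque()
        self.completions = deque()
        self.submit_sem = threading.Semaphore(0)    # posted by the user side
        self.complete_sem = threading.Semaphore(0)  # posted by the FUSE side


def fuse_worker(ring, handle, count):
    """FUSE side: wait for a submission, process it, signal completion."""
    for _ in range(count):
        ring.submit_sem.acquire()        # block until a request is announced
        request = ring.submissions.popleft()
        ring.completions.append(handle(request))
        ring.complete_sem.release()      # plays the role of sem_post


def submit_and_wait(ring, requests):
    """User side: enqueue requests, notify the worker, then collect the results."""
    for request in requests:
        ring.submissions.append(request)
        ring.submit_sem.release()
    results = []
    for _ in requests:
        ring.complete_sem.acquire()      # block until one request has completed
        results.append(ring.completions.popleft())
    return results
```

Neither side polls: each blocks on a semaphore until the other side has placed something in the ring.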

FUSE client implementation

The 3FS FUSE client implements basic file and directory operations, whereas JuiceFS’ FUSE client offers a more comprehensive implementation. For example:

- In 3FS, file lengths are eventually consistent, meaning users might see incorrect file sizes during writes.

- JuiceFS updates file lengths immediately after a successful object upload.

- JuiceFS supports advanced file system features such as:

  - BSD locks (flock) and POSIX locks (fcntl)

  - The copy_file_range interface

  - The readdirplus interface

  - The fallocate interface

Beyond the FUSE client, JuiceFS also provides:

- The Java SDK

- The S3 Gateway

- The CSI Driver

- The Python SDK (Enterprise Edition), which runs the client in the user process to avoid FUSE overhead

File distribution comparison

3FS file distribution

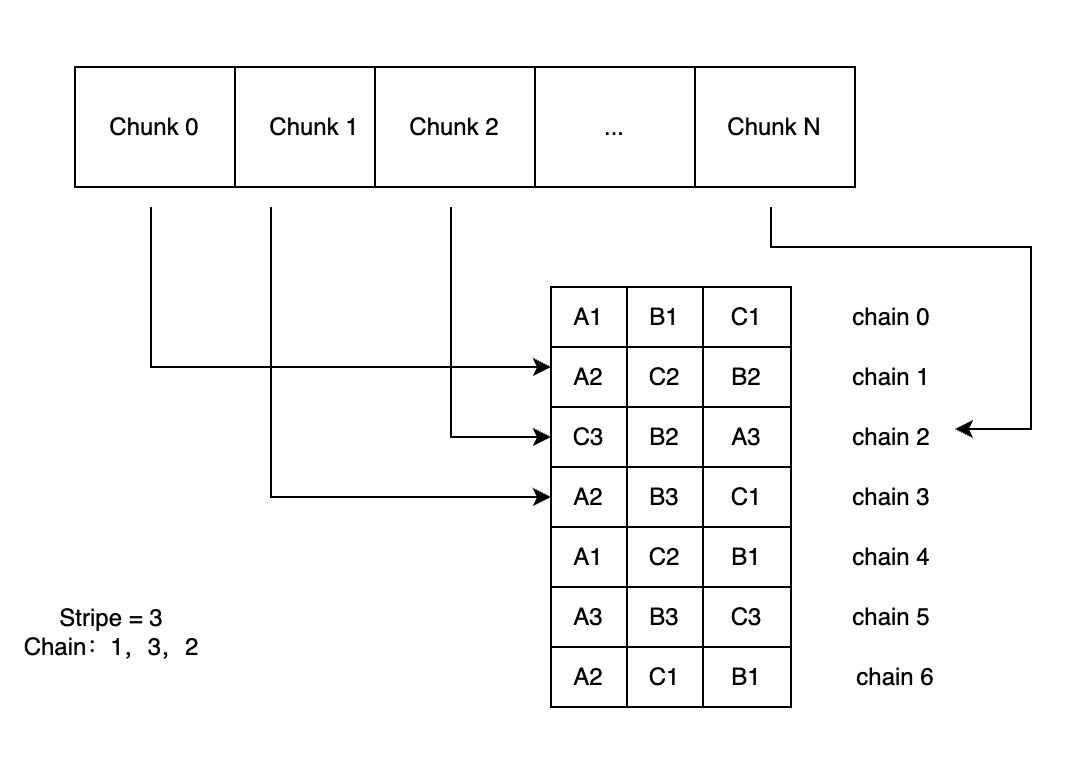

Because the chunk size in 3FS is fixed, the client only needs to obtain the chain information of the inode once. It can then calculate which chunks a request touches from the file inode and the offset and length of the I/O request, avoiding a database query for each I/O. The index of the chunk is offset / chunk_size, and the index of the chain that holds the chunk is chunk_id % stripe. With the chain index, the client can look up the chain's details (such as which targets the chain consists of) and send the I/O request to the corresponding storage service based on this routing information. After receiving a write request, the storage service writes the data to a new location in a copy-on-write (COW) manner. The original data remains readable until the old copy is reclaimed.
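The calculation is simple enough to sketch in a few lines. The function name `locate` and the tuple layout are hypothetical; `chains` stands for the ordered list of chain IDs stored in the file's metadata, so its length plays the role of stripe.

```python
def locate(offset, length, chunk_size, chains):
    """Split an I/O request into per-chunk pieces.

    Returns a list of (chunk_index, chain_id, offset_in_chunk, nbytes) tuples.
    """
    stripe = len(chains)
    end = offset + length
    pieces = []
    while offset < end:
        chunk_index = offset // chunk_size
        offset_in_chunk = offset % chunk_size
        nbytes = min(chunk_size - offset_in_chunk, end - offset)
        chain_id = chains[chunk_index % stripe]
        pieces.append((chunk_index, chain_id, offset_in_chunk, nbytes))
        offset += nbytes
    return pieces
```

No metadata lookup happens inside the loop: once the client holds `chains`, every piece of the request can be routed with integer arithmetic alone.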

To mitigate data imbalance, the first chain of each file is selected in a round-robin manner. For example, when stripe is 3, the first file created is assigned chain0, chain1, and chain2, and the next file is assigned chain1, chain2, and chain3. The system then randomly shuffles the selected chains and stores the resulting order in the metadata. The figure above shows an example of how a file is distributed when stripe is 3 and the shuffled chain order is 1, 3, 2.
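A minimal sketch of this selection policy is shown below. The `ChainAllocator` class is hypothetical, and wrapping around with a modulo when the window reaches the last chain is an assumption, since the text above does not describe that case.

```python
import random


class ChainAllocator:
    """Round-robin choice of the first chain, followed by a random shuffle."""

    def __init__(self, num_chains, rng=None):
        self.num_chains = num_chains
        self.next_start = 0
        self.rng = rng or random.Random()

    def allocate(self, stripe):
        start = self.next_start
        # Advance the starting chain by one for the next file.
        self.next_start = (start + 1) % self.num_chains
        # Assumption: the window of chains wraps around at the end of the list.
        chains = [(start + i) % self.num_chains for i in range(stripe)]
        self.rng.shuffle(chains)  # the shuffled order is what gets stored
        return chains
```

Two consecutive calls to `allocate(3)` on a fresh allocator return some ordering of chains 0, 1, 2 and then some ordering of chains 1, 2, 3, matching the example in the text.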

JuiceFS file distribution

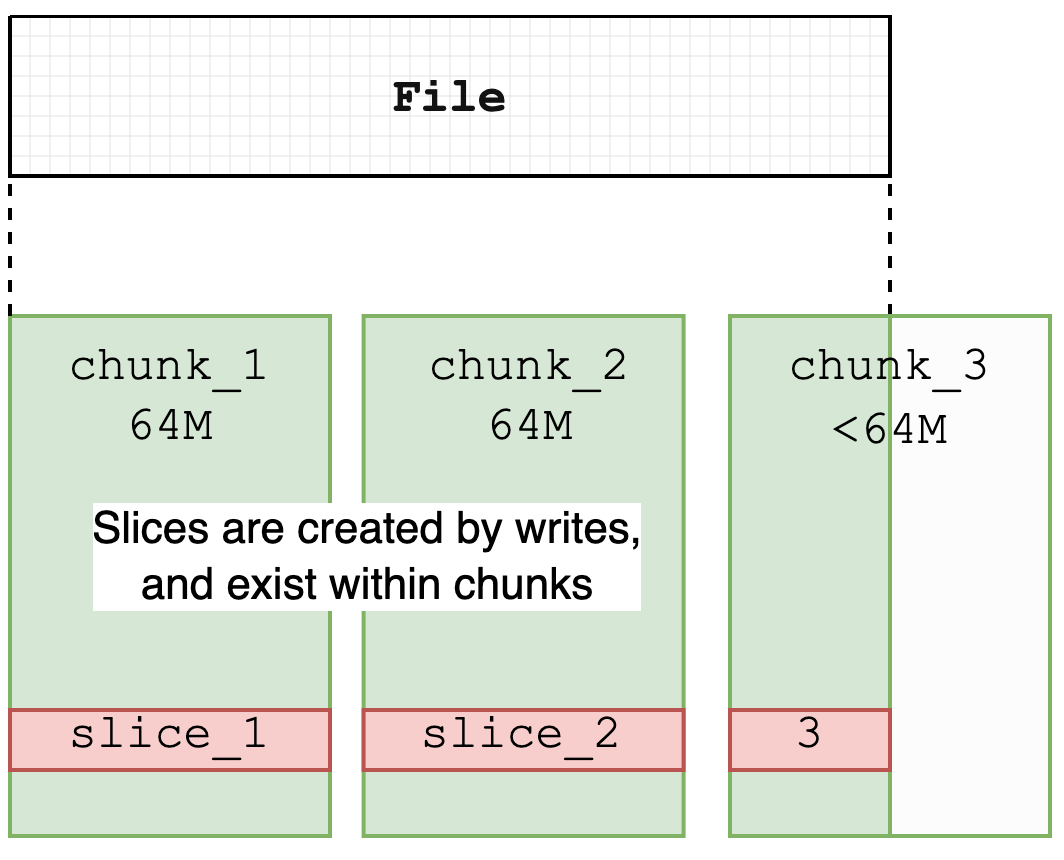

JuiceFS manages data according to the chunk, slice, and block rules. Each chunk has a fixed size of 64 MiB, which mainly serves to optimize data lookup and positioning. Actual file writes are performed on slices. Each slice represents one continuous write, belongs to a specific chunk, and does not cross the chunk boundary, so its length never exceeds 64 MiB. Chunks and slices are mainly logical divisions, while the block (4 MiB by default) is the basic unit of physical storage, used for the final storage of data in object storage and in the disk cache. For more details, see the JuiceFS documentation.
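The three levels can be illustrated with a small sketch that splits one contiguous write into slices and blocks. The function name and dictionary layout are hypothetical, and the sketch assumes that blocks are cut starting from the beginning of each slice.

```python
CHUNK_SIZE = 64 << 20  # 64 MiB, fixed
BLOCK_SIZE = 4 << 20   # 4 MiB, the default block size


def split_write(offset, length):
    """Split one contiguous write into per-chunk slices and their block sizes."""
    slices = []
    end = offset + length
    while offset < end:
        chunk_index = offset // CHUNK_SIZE
        offset_in_chunk = offset % CHUNK_SIZE
        # A slice never crosses a chunk boundary.
        slice_len = min(CHUNK_SIZE - offset_in_chunk, end - offset)
        full_blocks, remainder = divmod(slice_len, BLOCK_SIZE)
        blocks = [BLOCK_SIZE] * full_blocks + ([remainder] if remainder else [])
        slices.append({
            "chunk": chunk_index,
            "offset": offset_in_chunk,
            "length": slice_len,
            "blocks": blocks,
        })
        offset += slice_len
    return slices
```

For instance, a 10 MiB write starting at the 60 MiB mark of a file is split into a 4 MiB slice at the end of chunk 0 and a 6 MiB slice at the start of chunk 1, the latter stored as one 4 MiB block and one 2 MiB block.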

The slice is a structure in JuiceFS that is uncommon in other file systems. Its main function is to record file write operations and persist them in object storage. Object storage does not support in-place file modification, so JuiceFS introduces the slice structure to allow file content to be updated without rewriting the entire file. This is somewhat similar to a log-structured file system, where write operations only create new objects instead of overwriting existing ones. When a file is modified, the system creates a new slice and, after the slice is uploaded, updates the metadata to point the file content to the new slice. The overwritten slice content is then deleted from object storage by an asynchronous compaction process. As a result, object storage usage can temporarily exceed the actual file system usage.
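How newer slices shadow older ones within a chunk can be shown with a toy resolver. This is an illustration only, not the JuiceFS read path: the function name and the (slice_id, offset, length) tuples are hypothetical, and the byte-by-byte loop is meant for tiny examples.

```python
def visible_ranges(slices, chunk_length):
    """Return (start, end, slice_id) runs showing which slice serves each byte.

    `slices` is a list of (slice_id, offset, length) tuples in write order;
    later slices shadow earlier ones, and unwritten bytes map to None.
    """
    owner = [None] * chunk_length
    for slice_id, offset, length in slices:
        for i in range(offset, min(offset + length, chunk_length)):
            owner[i] = slice_id
    runs = []
    for i, slice_id in enumerate(owner):
        if runs and runs[-1][2] == slice_id:
            runs[-1] = (runs[-1][0], i + 1, slice_id)  # extend the current run
        else:
            runs.append((i, i + 1, slice_id))
    return runs
```

If slice 1 covers bytes 0 to 10 and a later slice 2 overwrites bytes 4 to 7, reads of that range are served from slice 2, while the three shadowed bytes of slice 1 still occupy object storage until compaction removes them.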

In addition, all JuiceFS slices are write-once. This reduces the reliance on the consistency of the underlying object storage and greatly simplifies the cache system, making data consistency easier to ensure. This design also facilitates zero-copy semantics in the file system and supports operations such as copy_file_range and clone.

3FS RPC framework

3FS uses RDMA as the underlying network communication protocol, which is not currently supported by JuiceFS. The following is an analysis of this.

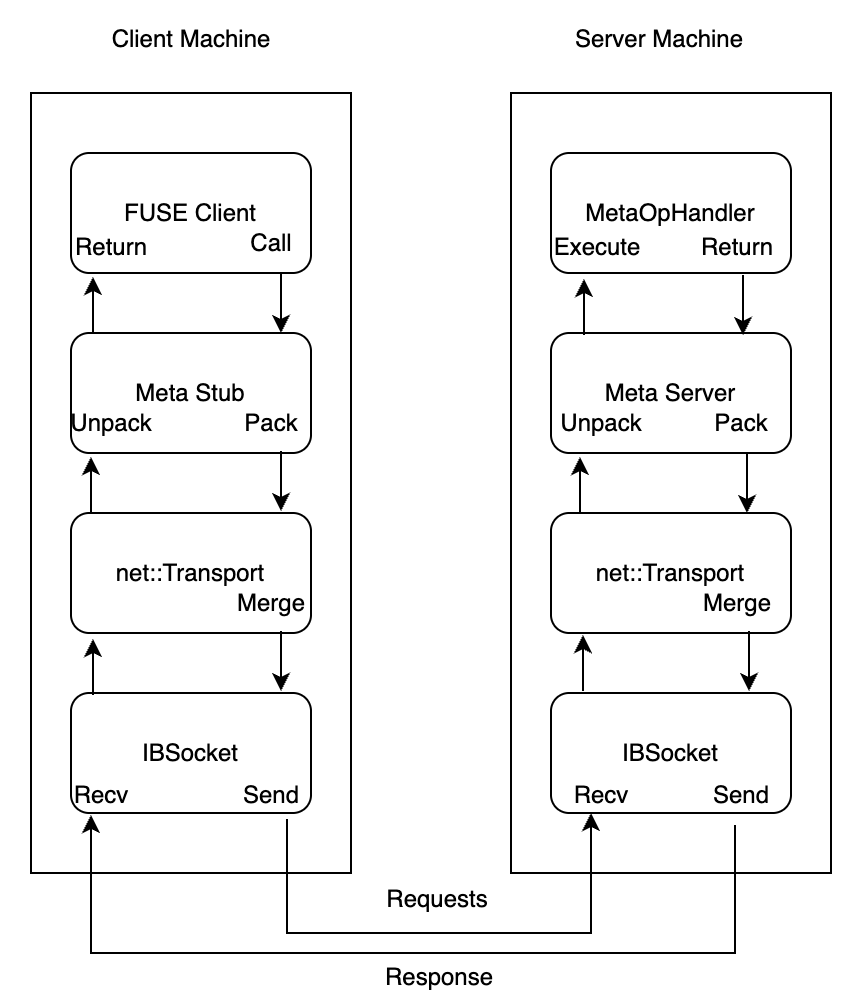

3FS implements an RPC framework to complete operations on the underlying IB network. In addition to network operations, the RPC framework also provides capabilities such as serialization and packet merging. Because C++ does not have reflection capabilities, 3FS also implements a reflection library through templates to serialize data structures such as requests and responses used by RPC. The data structures that need to be serialized only need to use specific macros to define the properties that need to be serialized. RPC calls are all completed asynchronously, so the serialized data can only be allocated from the heap and released after the call is completed. To increase the speed of memory allocation and release, caches are used for allocated objects.

The 3FS cache consists of two parts: a TLS (thread-local storage) queue and a global queue. No lock is required when obtaining a cached object from the TLS queue; when the TLS queue is empty, a lock must be acquired to obtain cached objects from the global queue. So in the best case, no lock is required to obtain a cached object.
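The two-level design can be sketched as below. 3FS implements this in C++; the Python `ObjectPool` class, its `batch` parameter, and the refill and spill thresholds are hypothetical and only illustrate the fast path (thread-local, no lock) and the slow path (global queue, under a lock).

```python
import threading


class ObjectPool:
    """Two-level object cache: a per-thread queue backed by a locked global queue."""

    def __init__(self, factory, batch=4):
        self.factory = factory
        self.batch = batch
        self.local = threading.local()
        self.global_queue = []
        self.lock = threading.Lock()
        self.lock_acquisitions = 0  # instrumentation for the example only

    def _tls_queue(self):
        if not hasattr(self.local, "queue"):
            self.local.queue = []
        return self.local.queue

    def get(self):
        queue = self._tls_queue()
        if queue:
            return queue.pop()  # fast path: thread-local, no lock needed
        with self.lock:         # slow path: refill from the global queue
            self.lock_acquisitions += 1
            refill = self.global_queue[-self.batch:]
            del self.global_queue[-self.batch:]
        queue.extend(refill)
        return queue.pop() if queue else self.factory()

    def put(self, obj):
        queue = self._tls_queue()
        queue.append(obj)
        if len(queue) > 2 * self.batch:  # spill the surplus to the global queue
            with self.lock:
                self.lock_acquisitions += 1
                self.global_queue.extend(queue[self.batch:])
            del queue[self.batch:]
```

A thread that repeatedly gets and returns the same few objects touches the lock only on its first allocation; after that, every `get` and `put` stays on the thread-local queue.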

Unlike the payload of I/O requests, the memory of cached objects is not registered with the RDMA device. Therefore, when the data arrives at the IBSocket, it’s copied to a buffer registered with the IB device. Multiple RPC requests may be merged into one IB request and sent to the peer. The figure above shows the RPC process of the FUSE client calling the Meta service.

Feature comparison

| Feature | 3FS | JuiceFS Community Edition | JuiceFS Enterprise Edition |
|---|---|---|---|
| Metadata | Stateless metadata service + FoundationDB | External database | Self-developed high-performance distributed metadata engine (horizontally scalable) |
| Data storage | Self-managed | Object storage | Object storage |
| Redundancy | Multi-replica | Provided by object storage | Provided by object storage |
| Data caching | None | Local cache | Self-developed high-performance multi-copy distributed cache |
| Encryption | Not supported | Supported | Supported |
| Compression | Not supported | Supported | Supported |
| Quota management | Not supported | Supported | Supported |
| Network protocol | RDMA | TCP | TCP |
| Snapshots | Not supported | Supports cloning | Supports cloning |
| POSIX ACL | Not supported | Supported | Supported |
| POSIX compliance | Partial | Fully compatible | Fully compatible |
| CSI Driver | No official support | Supported | Supported |
| Clients | FUSE + native client | POSIX (FUSE), Java SDK, S3 Gateway | POSIX (FUSE), Java SDK, S3 Gateway, Python SDK |
| Multi-cloud mirroring | Not supported | Not supported | Supported |
| Cross-cloud and cross-region data replication | Not supported | Not supported | Supported |
| Main maintainer | DeepSeek | Juicedata | Juicedata |
| Development language | C++, Rust (local storage engine) | Go | Go |
| License | MIT | Apache License 2.0 | Commercial |

Summary

For large-scale AI training, the primary requirement is high read bandwidth. To address this, 3FS adopts a performance-first design:

- Stores data on high-speed disks, requiring users to manage underlying storage.

- Achieves zero-copy from client to NIC, reducing I/O latency and memory bandwidth usage via shared memory and semaphores.

- Enhances small I/O and metadata operations with TLS-backed I/O buffer pools and merged network requests.

- Leverages RDMA for better networking performance.

While this approach delivers high performance, it comes with higher costs and greater maintenance overhead.

On the other hand, JuiceFS uses object storage as its backend, significantly reducing costs and simplifying maintenance. To meet AI workloads' read performance demands:

- The Enterprise Edition introduces:

  - Distributed caching

  - Distributed metadata service

  - Python SDK

- The upcoming v5.2 release adds zero-copy over TCP for faster data transfers.

JuiceFS also offers:

- Full POSIX compatibility and a mature open-source ecosystem.

- Kubernetes CSI support, simplifying cloud deployment.

- Enterprise features: quotas, security management, and disaster recovery.

If you have any questions about this article, feel free to join the JuiceFS discussions on GitHub and the community on Slack.